The study also calls out LM Arena for what appears to be much greater promotion of proprietary models like Gemini, ChatGPT, and Claude. Developers collect data on model interactions from the Chatbot Arena API, but teams focusing on open models consistently get the short end of the stick.

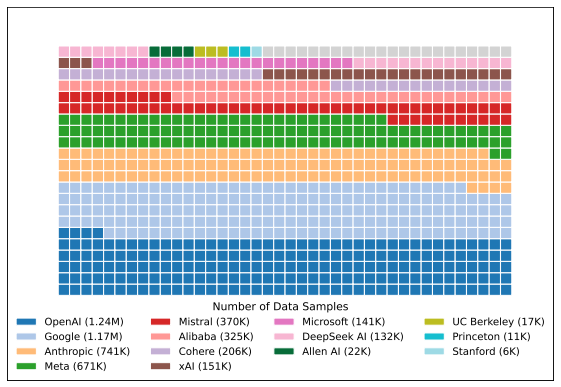

The researchers point out that certain models appear in arena face-offs much more often, with Google and OpenAI together accounting for over 34 percent of collected model data. Firms like xAI, Meta, and Amazon are also disproportionately represented in the arena. As a result, those firms get more vibemarking data than the makers of open models.

More models, more evals

The study authors offer a list of suggestions to make LM Arena fairer. Several of the paper's recommendations aim to correct the imbalance of privately tested commercial models, for example, by limiting the number of models a group can add and retract before releasing one. The study also suggests showing all model results, even if they aren't final.

However, the site's operators take issue with some of the paper's methodology and conclusions. LM Arena points out that its pre-release testing features haven't been kept secret, citing a March 2024 blog post that briefly explained the system. The operators also contend that model creators don't technically choose which version is shown. Instead, the site simply doesn't show private versions, for simplicity's sake. When a developer releases the final version, that is what LM Arena adds to the leaderboard.

Proprietary models get disproportionate attention in the Chatbot Arena, the study says.

Credit: Shivalika Singh et al.

One area where the two sides may find agreement is unequal matchups. The study authors call for fair sampling, which would ensure open models appear in Chatbot Arena at a rate similar to the likes of Gemini and ChatGPT. LM Arena has said it will work to make its sampling algorithm more varied so you don't always get the big commercial models. That would send more eval data to smaller players, giving them the chance to improve and challenge the big commercial models.
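To make the fair-sampling idea concrete, here is a minimal Python sketch under stated assumptions. It compares drawing model pairs uniformly at random with drawing them in proportion to per-model weights, then measures what share of battle slots each model ends up with. The function names, the model labels, and the 4:1 weighting are all hypothetical; this is not LM Arena's actual sampling algorithm, which this article does not describe.

```python
import random
from collections import Counter


def sample_pair_uniform(models, rng):
    """Hypothetical fair sampler: every pair of distinct models is equally likely."""
    first, second = rng.sample(models, 2)
    return first, second


def sample_pair_weighted(models, weights, rng):
    """Hypothetical skewed sampler: models are drawn in proportion to their weights.

    The first model is drawn by weight; the second is drawn by weight from the
    remaining models, so heavily weighted models land in far more battles.
    """
    first = rng.choices(models, weights=weights, k=1)[0]
    rest = [(m, w) for m, w in zip(models, weights) if m != first]
    second = rng.choices([m for m, _ in rest], weights=[w for _, w in rest], k=1)[0]
    return first, second


def appearance_share(pairs):
    """Fraction of all battle slots (two per battle) that each model occupies."""
    counts = Counter(model for pair in pairs for model in pair)
    total = sum(counts.values())
    return {model: count / total for model, count in counts.items()}


if __name__ == "__main__":
    rng = random.Random(42)
    # Hypothetical labels: two proprietary and two open models.
    models = ["proprietary-a", "proprietary-b", "open-c", "open-d"]
    weights = [4, 4, 1, 1]  # assumed 4:1 skew toward the proprietary models
    n_battles = 20_000

    uniform = appearance_share(sample_pair_uniform(models, rng) for _ in range(n_battles))
    skewed = appearance_share(sample_pair_weighted(models, weights, rng) for _ in range(n_battles))

    for model in models:
        print(f"{model:14s} uniform={uniform[model]:.3f} weighted={skewed[model]:.3f}")
```

Under uniform sampling, each model fills roughly a quarter of the battle slots. With the assumed 4:1 weights, each open model drops to roughly 12 percent, so its developer would collect correspondingly fewer votes.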

LM Arena recently announced it was forming a corporate entity to continue its work. With money on the table, the operators need to ensure Chatbot Arena continues to figure into the development of popular models. However, it's unclear whether this is an objectively better way to evaluate chatbots than academic tests. Because people vote on vibes, there's a real possibility we're pushing models toward sycophantic tendencies. This may have helped nudge ChatGPT into suck-up territory in recent weeks, a change OpenAI hastily reverted after widespread anger.