Does measurement matter?

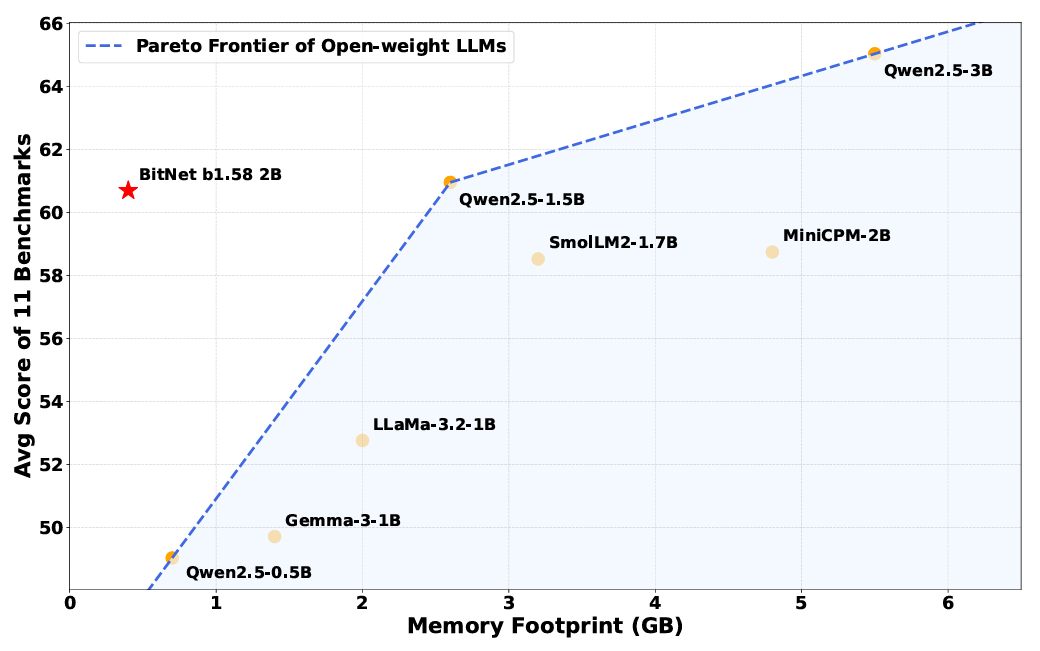

Reminiscence necessities are the obvious benefit of decreasing the complexity of a mannequin’s inside weights. The BitNet b1.58 mannequin can run utilizing simply 0.4GB of reminiscence, in comparison with anyplace from 2 to 5GB for different open-weight fashions of roughly the identical parameter measurement.

However the simplified weighting system additionally results in extra environment friendly operation at inference time, with inside operations that rely way more on easy addition directions and fewer on computationally pricey multiplication directions. These effectivity enhancements imply BitNet b1.58 makes use of anyplace from 85 to 96 % much less power in comparison with comparable full-precision fashions, the researchers estimate.

A demo of BitNet b1.58 operating at pace on an Apple M2 CPU.

By utilizing a highly optimized kernel designed particularly for the BitNet structure, the BitNet b1.58 mannequin may run a number of occasions sooner than comparable fashions operating on an ordinary full-precision transformer. The system is environment friendly sufficient to succeed in “speeds corresponding to human studying (5-7 tokens per second)” utilizing a single CPU, the researchers write (you’ll be able to download and run those optimized kernels yourself on a variety of ARM and x86 CPUs, or strive it utilizing this web demo).

Crucially, the researchers say these enhancements do not come at the price of efficiency on numerous benchmarks testing reasoning, math, and “data” capabilities (though that declare has but to be verified independently). Averaging the outcomes on a number of frequent benchmarks, the researchers discovered that BitNet “achieves capabilities almost on par with main fashions in its measurement class whereas providing dramatically improved effectivity.”

Regardless of its smaller reminiscence footprint, BitNet nonetheless performs equally to “full precision” weighted fashions on many benchmarks.

Regardless of the obvious success of this “proof of idea” BitNet mannequin, the researchers write that they do not fairly perceive why the mannequin works in addition to it does with such simplified weighting. “Delving deeper into the theoretical underpinnings of why 1-bit coaching at scale is efficient stays an open space,” they write. And extra analysis remains to be wanted to get these BitNet fashions to compete with the general measurement and context window “reminiscence” of at present’s largest fashions.

Nonetheless, this new analysis exhibits a possible various method for AI fashions which are dealing with spiraling hardware and energy costs from operating on costly and highly effective GPUs. It is potential that at present’s “full precision” fashions are like muscle automobiles which are losing plenty of power and energy when the equal of a pleasant sub-compact might ship comparable outcomes.