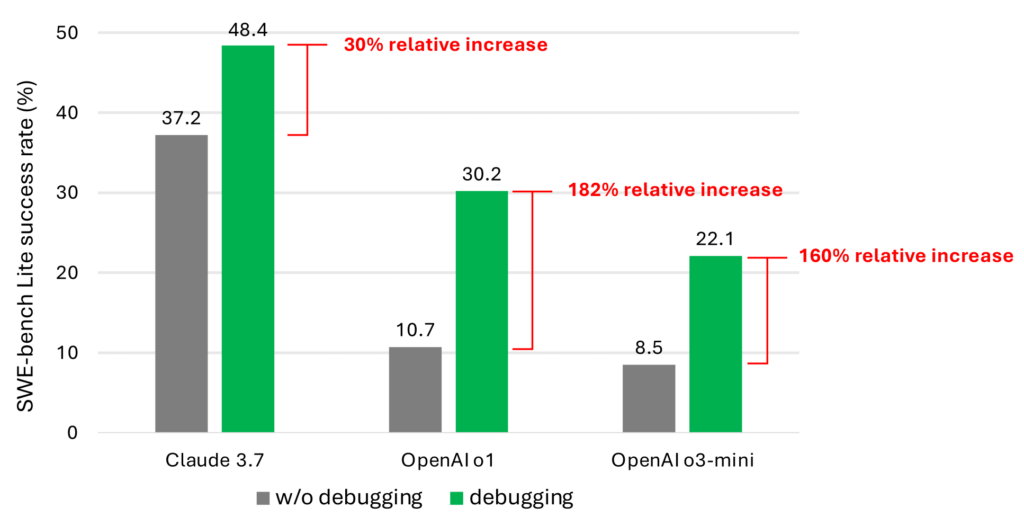

This approach is much more successful than relying on the models as they’re usually used, but when your best case is a 48.4 percent success rate, you're not ready for primetime. The limitations are likely because the models don't fully understand how to best use the tools, and because their current training data is not tailored to this use case.

“We believe this is due to the scarcity of data representing sequential decision-making behavior (e.g., debugging traces) in the current LLM training corpus,” the blog post says. “However, the significant performance improvement… validates that this is a promising research direction.”

This initial report is just the start of the efforts, the post claims. The next step is to “fine-tune an info-seeking model specialized in gathering the necessary information to resolve bugs.” If the model is large, the best move to save inference costs may be to “build a smaller info-seeking model that can provide relevant information to the larger one.”

This isn't the first time we've seen results that suggest some of the ambitious ideas about AI agents directly replacing developers are pretty far from reality. Numerous studies have already shown that even though an AI tool can sometimes create an application that seems acceptable to the user for a narrow task, the models tend to produce code laden with bugs and security vulnerabilities, and they aren’t generally capable of fixing those problems.

This is an early step on the path to AI coding agents, but most researchers agree it remains likely that the best outcome is an agent that saves a human developer a substantial amount of time, not one that can do everything they can do.